Bodies in Motion

my studies in motion capture

The time: Fall 2016. The course: Bodies in Motion, taught by Javier Molina and Todd Bryant. The subject: motion capture! This blog chronicles my studies and work in the course, full of magical creations and intriguing discourse.

Week ?, 11/10/2016: Mega-update

It's been a while since I have posted an update, and a lot has happened. My Master's thesis project needed some attention, so I have been focusing on that. The election served as another source of distraction. Work has been done for Bodies in Motion, just not well documented. This post shall bring that progress to life.

I got a chance to play around with a system called Perception Neuron, a wireless motion-tracking controller meant for video games and for low-fidelity motion capture in physiology research and animation. I enjoyed how affordable it is, in both space and money, compared to a dedicated room with an Optitrack system, but I wasn't a fan of its fragility and lack of precision. Each of the tiny accelerometers on the controller has to be coddled to avoid damage from electromagnetic radiation, impacts, and improper insertion into the suit. With a proper MOCAP suit using Optitrack, if a marker gets damaged, it's very easy to get a new one. The rigid bodies we use are 3D printed and covered in reflective tape, which might be hard to reproduce without a 3D printer, but they are pretty tough once they are made. The Perception Neuron also does not track absolute position: everything is relative. This is fine for simple gestures or small animations, but for a whole live performance piece, I have my doubts about its accuracy. Since we have access to Optitrack, I want to take the opportunity to make something great with it. It does look cool, though.
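To convince myself why relative tracking worries me for a full performance, here is a rough 1-D sketch. An inertial sensor reports acceleration, and turning that into position means integrating twice, so even a tiny constant sensor bias grows with the square of time. The function names, the 0.02 m/s² bias, the noise level, and the 100 Hz sample rate are all made-up assumptions, and Perception Neuron's own software surely fuses its sensor data in a more sophisticated way than this. The sketch only illustrates the drift problem that an optical system like Optitrack sidesteps by measuring each marker's absolute position every frame.

```python
import random
from typing import List


def integrate_position(accels: List[float], dt: float) -> List[float]:
    """Dead-reckon 1-D position by integrating acceleration twice (simple Euler steps)."""
    velocity = 0.0
    position = 0.0
    positions = []
    for a in accels:
        velocity += a * dt         # acceleration -> velocity
        position += velocity * dt  # velocity -> position
        positions.append(position)
    return positions


def simulate_drift(seconds: float = 10.0, dt: float = 0.01, bias: float = 0.02,
                   noise: float = 0.05, seed: int = 0) -> List[float]:
    """A performer standing perfectly still: true acceleration is zero,
    but the (hypothetical) sensor adds a constant bias plus Gaussian noise."""
    rng = random.Random(seed)
    steps = round(seconds / dt)
    readings = [bias + rng.gauss(0.0, noise) for _ in range(steps)]
    return integrate_position(readings, dt)


if __name__ == "__main__":
    drift = simulate_drift(noise=0.0)
    print(f"apparent movement after 10 s: {drift[-1]:.3f} m")
```

With the noise turned off, the motionless performer appears to have slid about 1 m after only 10 seconds (0.5 × 0.02 × 10² = 1 m, plus a hair of discretization error). Real noise only makes it wander further.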

I got a chance to set up the Optitrack with the full MOCAP suit. That's not me in the suit, but I learned how to ready the software environment and to direct others to ensure proper alignment of the IR-markers onto the suit.

The group project is developing along a much more directed trajectory. Our project, "The Umwelt," aims to look at how the fundamentals of reality vary between different individuals in an ecosystem. In our first iteration, we thought about using MOCAP and interpretive dance to create a live performance about an encounter between predator and prey. Our project has since pivoted due to our lack of experience with choreography and the difficulty of coordinating with people who have that experience. Instead, we are sticking to our group's strengths (experimenting with technology, script writing, and theatre) to create a multimedia stage performance.

The script is in the works, but basically it will be a one-act play between two characters, whose actors will wear MOCAP suits. The play will be about a famous artist struggling with his fame, his shortcomings, and other "inner demons," as represented by the other actor. The actors' interactions will be interpreted in two different ways and projected on two opposite walls. One projection will show a "real world" scene set up in Unreal, with the two actors controlling two characters in the scene. This projection serves as an extension of the stage, adding scenery and vantage points otherwise impossible for the viewer, such as a news helicopter and cell phone footage of onlookers. The second projection will be an abstract interpretation of the artist's emotional state and will be in constant flux throughout the performance. The actors will still control characters, but their appearance will reflect not the "real world" but an introspection, something onlookers could not see for themselves. Here is a link to our previous presentation on our aesthetic choices; today we will also have our press kit completed, with fuller details on the performance.

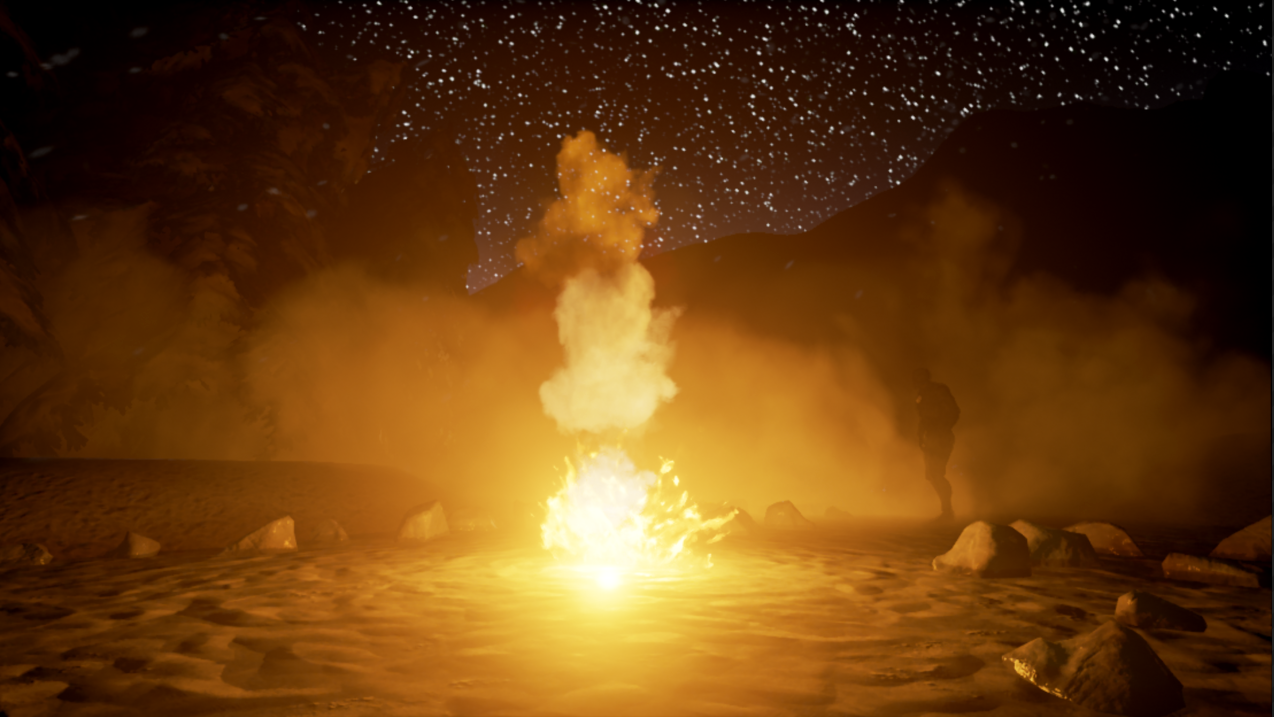

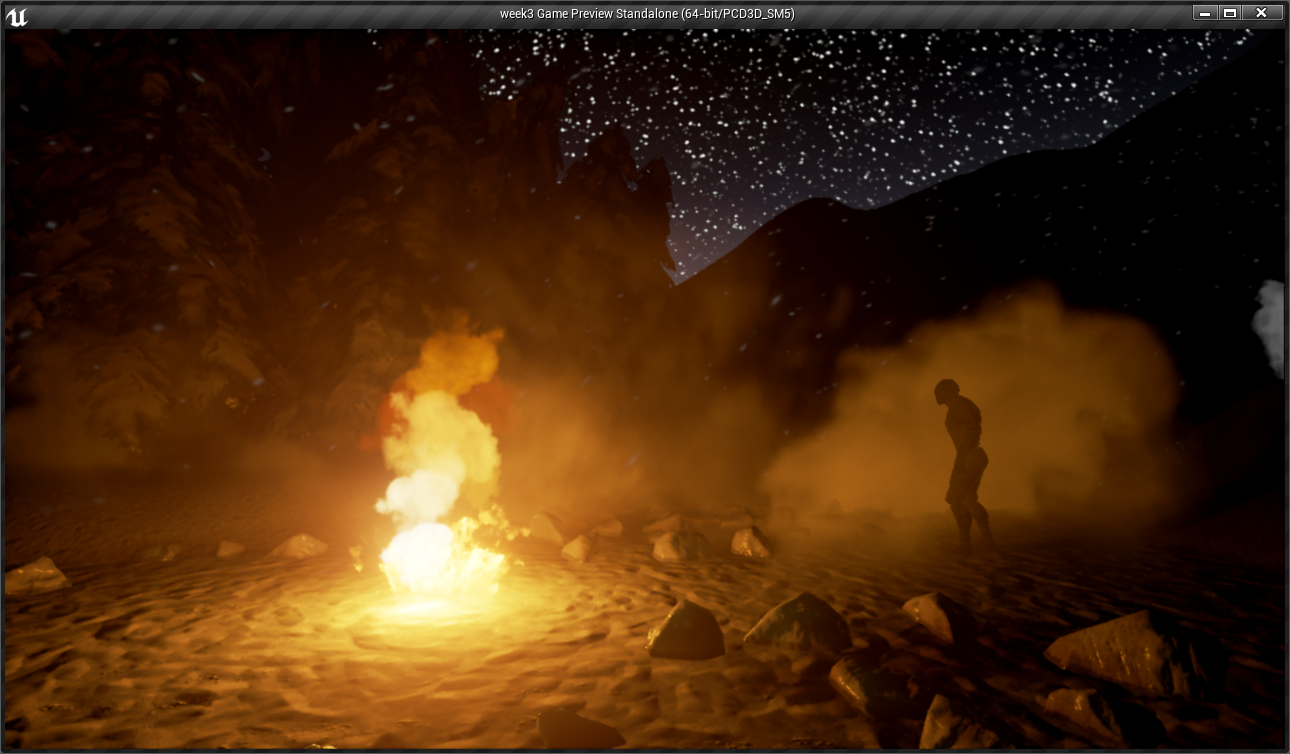

As a teaser, here is the scene constructed for the "real world" projection.

Week 4, 8/6/2016: Animation in Unreal 4

My group put together a simple scene in Unreal to test out animations and meshes. I was tasked with creating some kind of interaction in the scene. I took each of the 4 models and animations given to me, made 20 copies of each, and put them in groups. I placed the groups around the scene, experimenting with different combinations of animation start times, rotations, and levels for each group. Then I made each group invisible until the player character walked into that group's trigger volume.
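The layout logic can be sketched outside the engine. This is a hypothetical Python sketch, not Unreal code: the `Instance` fields, the 2-second clip length, the three height levels, and the placeholder model names are my assumptions. Each group gets 20 copies of one model with randomized animation start offsets, rotations, and heights, and every copy starts hidden until something calls `reveal` on its group.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Instance:
    model: str
    start_offset: float    # seconds into the looping clip where this copy starts
    yaw: float             # rotation about the vertical axis, in degrees
    height: float          # vertical level in world units (hypothetical values)
    visible: bool = False  # hidden until the player enters the group's trigger


def build_group(model: str, count: int = 20, clip_length: float = 2.0,
                levels: Sequence[float] = (0.0, 100.0, 200.0),
                seed: int = 0) -> List[Instance]:
    """Make `count` copies of one model, each with its own start time, rotation, and level."""
    rng = random.Random(seed)  # seeded so a layout can be reproduced
    return [
        Instance(
            model=model,
            start_offset=rng.uniform(0.0, clip_length),
            yaw=rng.uniform(0.0, 360.0),
            height=rng.choice(levels),
        )
        for _ in range(count)
    ]


def reveal(group: List[Instance]) -> None:
    """Make every copy in the group visible (called when the player walks in)."""
    for inst in group:
        inst.visible = True


# Four placeholder models, one group each: 4 x 20 = 80 instances.
models = ["model_a", "model_b", "model_c", "model_d"]
scene = {name: build_group(name, seed=i) for i, name in enumerate(models)}
```

Seeding each group's random generator keeps a layout reproducible while still making it cheap to try a different combination: change the seed and rebuild.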

Week 3, 8/1/2016: Screen Capture and Interaction in Unreal 4

I added three interactions to my Unreal scene, each using a simple trigger volume. Two change the state of the player character, and the third teleports the player character to another level.
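A trigger volume boils down to a containment test plus an "on enter" callback that fires once when the player crosses into the volume, roughly what Unreal's begin-overlap events provide. This hypothetical Python sketch uses axis-aligned boxes; the `scale` and `speed` changes stand in for the two state changes, and the box coordinates and level names are made up.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Player:
    position: Vec3
    scale: float = 1.0       # placeholder state #1
    speed: float = 600.0     # placeholder state #2
    level: str = "MainLevel"


@dataclass
class TriggerBox:
    min_corner: Vec3
    max_corner: Vec3
    on_enter: Callable[[Player], None]
    occupied: bool = False   # was the player inside on the previous update?

    def contains(self, point: Vec3) -> bool:
        return all(lo <= c <= hi for lo, c, hi in zip(self.min_corner, point, self.max_corner))

    def update(self, player: Player) -> None:
        inside = self.contains(player.position)
        if inside and not self.occupied:
            self.on_enter(player)  # fire once on entry, not every frame
        self.occupied = inside


def shrink(player: Player) -> None:
    player.scale = 0.5                  # placeholder state change #1


def slow_down(player: Player) -> None:
    player.speed = 200.0                # placeholder state change #2


def teleport(player: Player) -> None:
    player.level = "OtherLevel"         # in Unreal, an Open Level call would go here
    player.position = (0.0, 0.0, 0.0)   # spawn point in the new level (assumed)


triggers = [
    TriggerBox((100.0, 0.0, 0.0), (200.0, 100.0, 100.0), shrink),
    TriggerBox((300.0, 0.0, 0.0), (400.0, 100.0, 100.0), slow_down),
    TriggerBox((500.0, 0.0, 0.0), (600.0, 100.0, 100.0), teleport),
]


def tick(player: Player) -> None:
    """Run once per frame after the player moves."""
    for trigger in triggers:
        trigger.update(player)
```

Tracking `occupied` is what keeps an action from re-firing every frame while the player stands inside the volume; walking out and back in fires it again.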

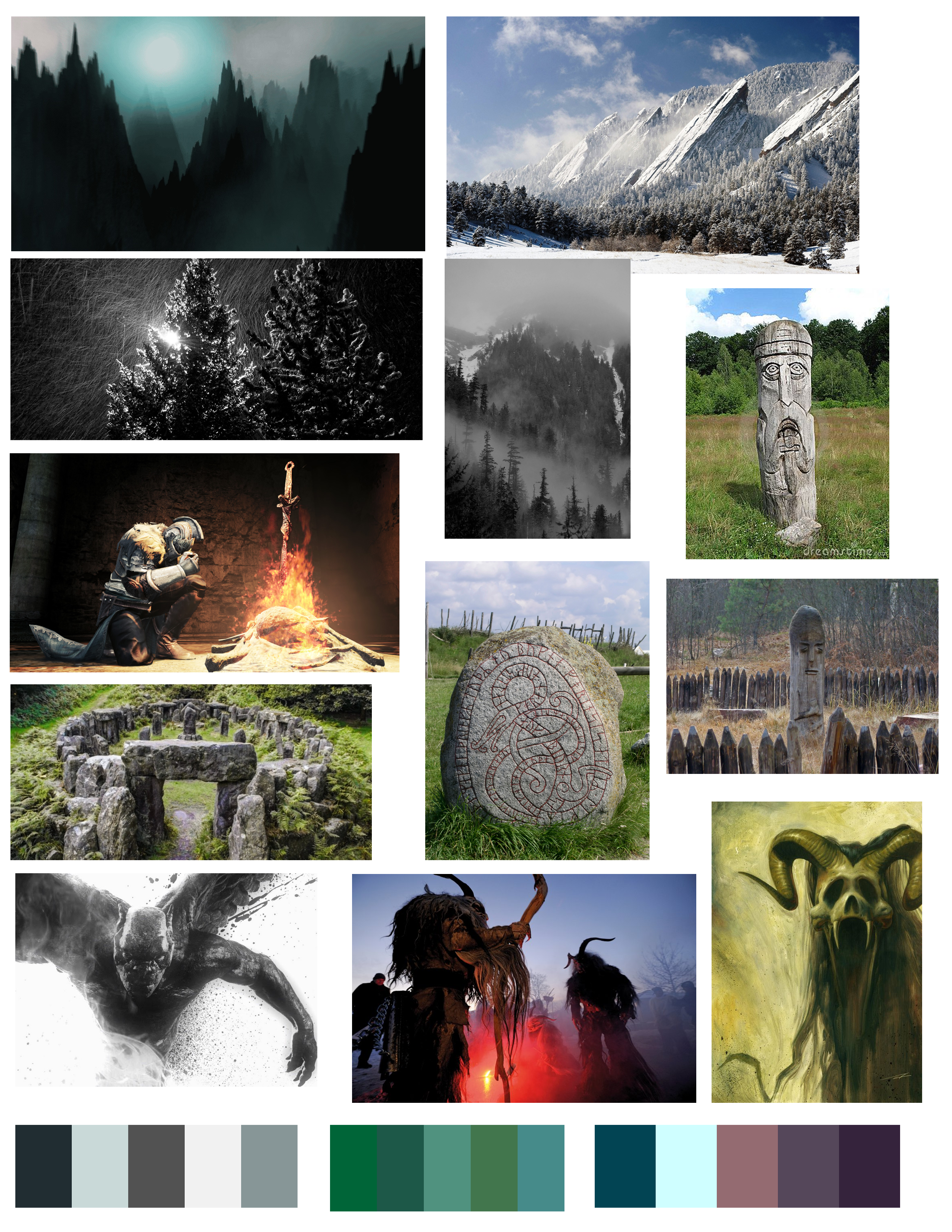

I also created a mood board for my intended aesthetic for my project. I'm not sure how this is going to work out now that I'm in a group, but I'll post it here anyway.

Week 2.5, 9/29/2016: More playing with Unreal 4

I finally finished the exercise for this week to a level of execution that I would not be embarrassed to show publicly. Here are some screenshots of my Unreal 4 scene.

Week 2, 9/15/2016: Brainstorming project ideas and playing with Unreal 4 Editor

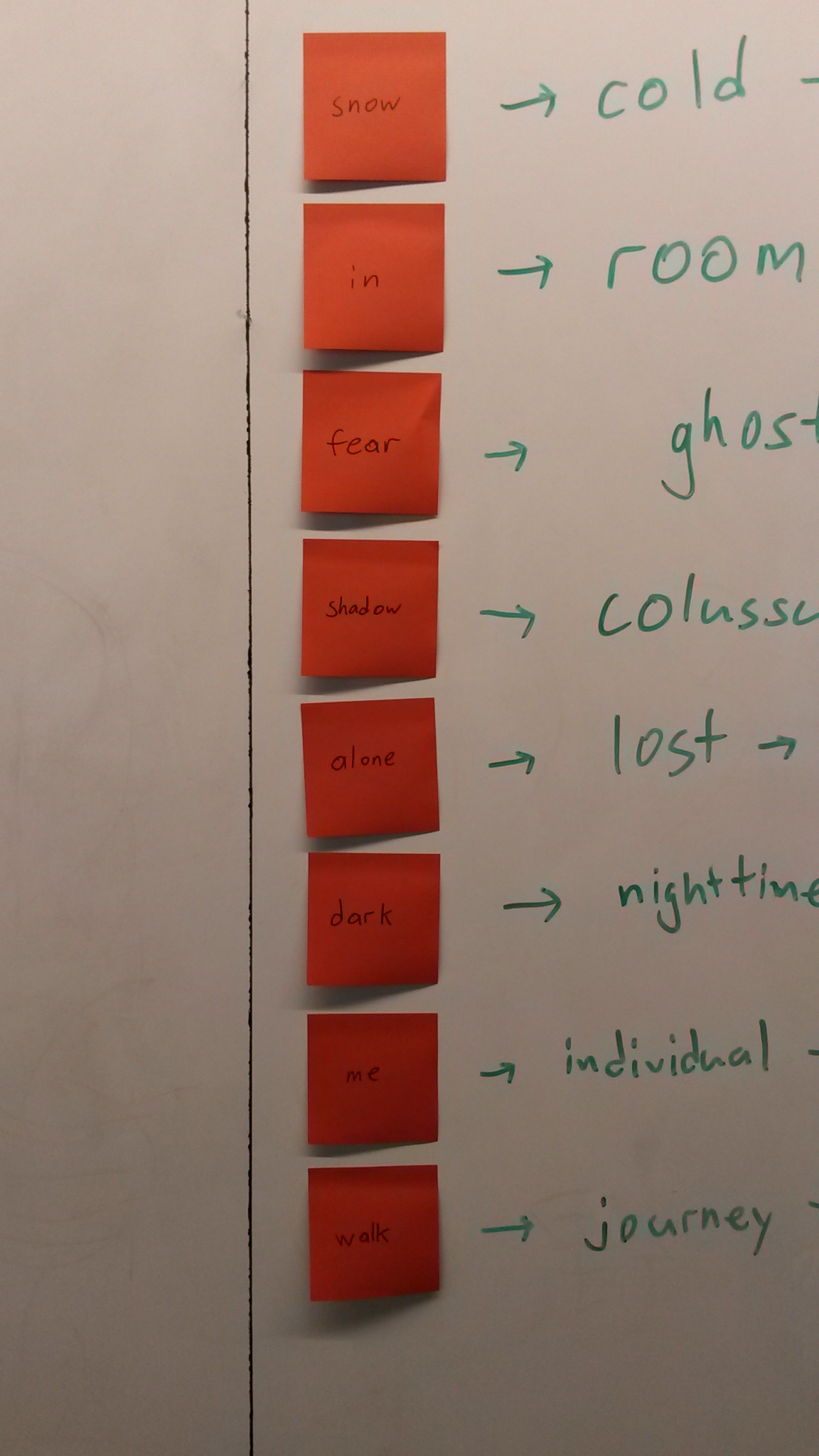

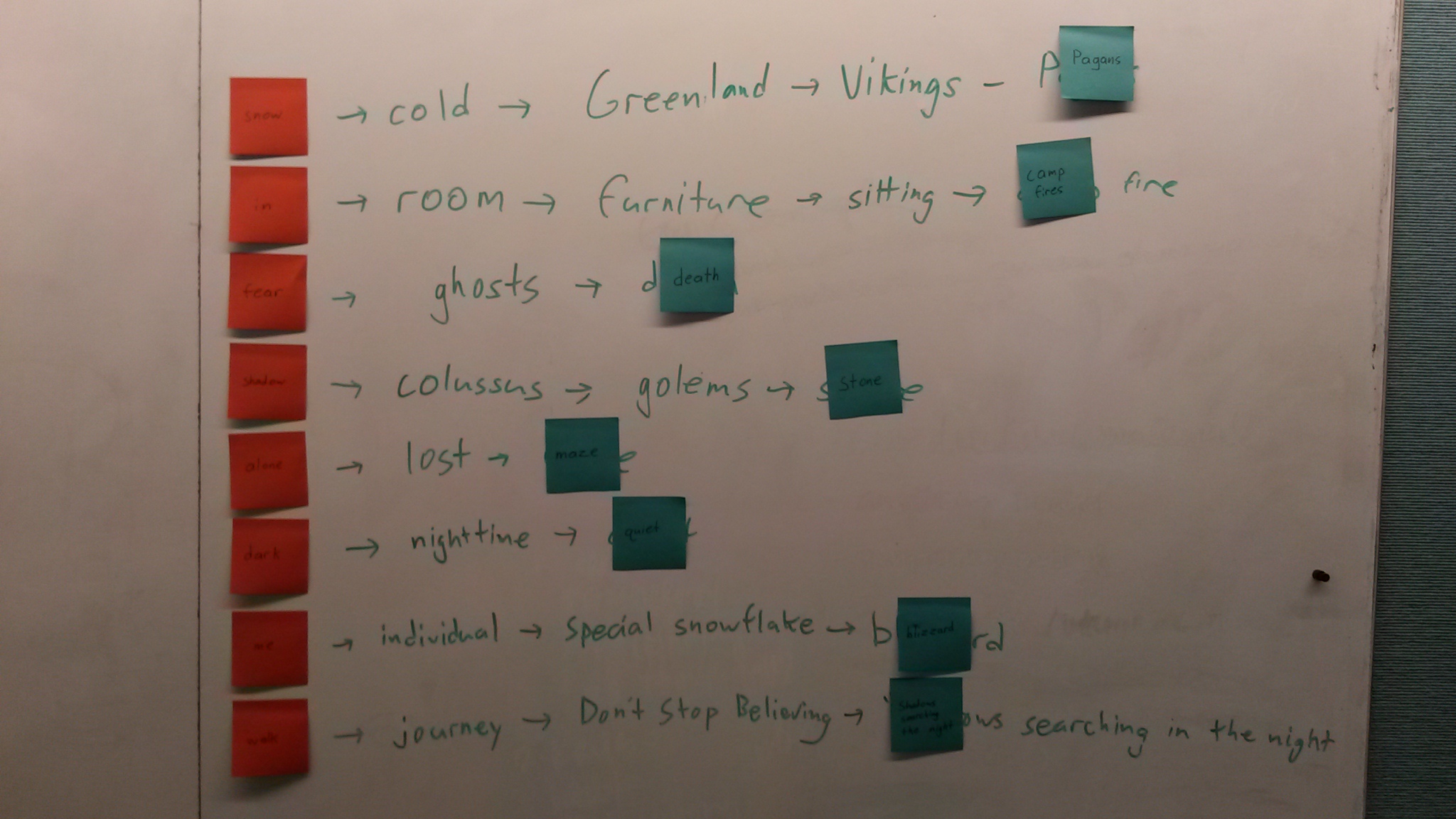

This week we worked on coming up with interesting narratives, themes, and activities to experience in VR. In brief, I started with a poem given to me at the end of class. After reading it a couple of times, I picked some key words that stood out to me. They weren't the most ... nuanced words from the poem, but they were the words I thought could instigate a brilliant brainstorm session.

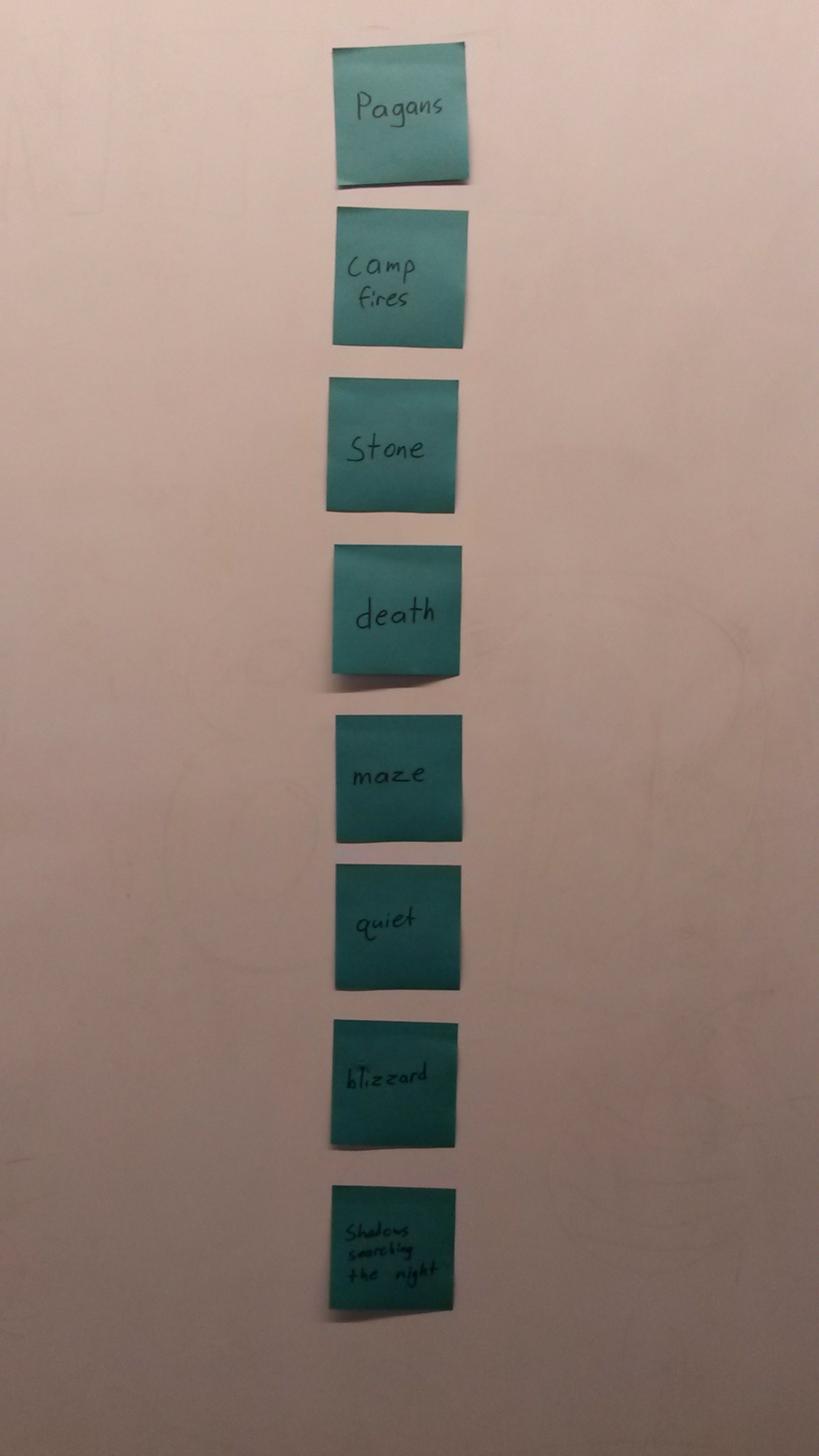

With these eight words, I played the "ABC Game." I look at a word and then instantly, without putting too much thought into it, write down another. Then I repeat with that second word to get a third. "A makes me think of B, B makes me think of C."

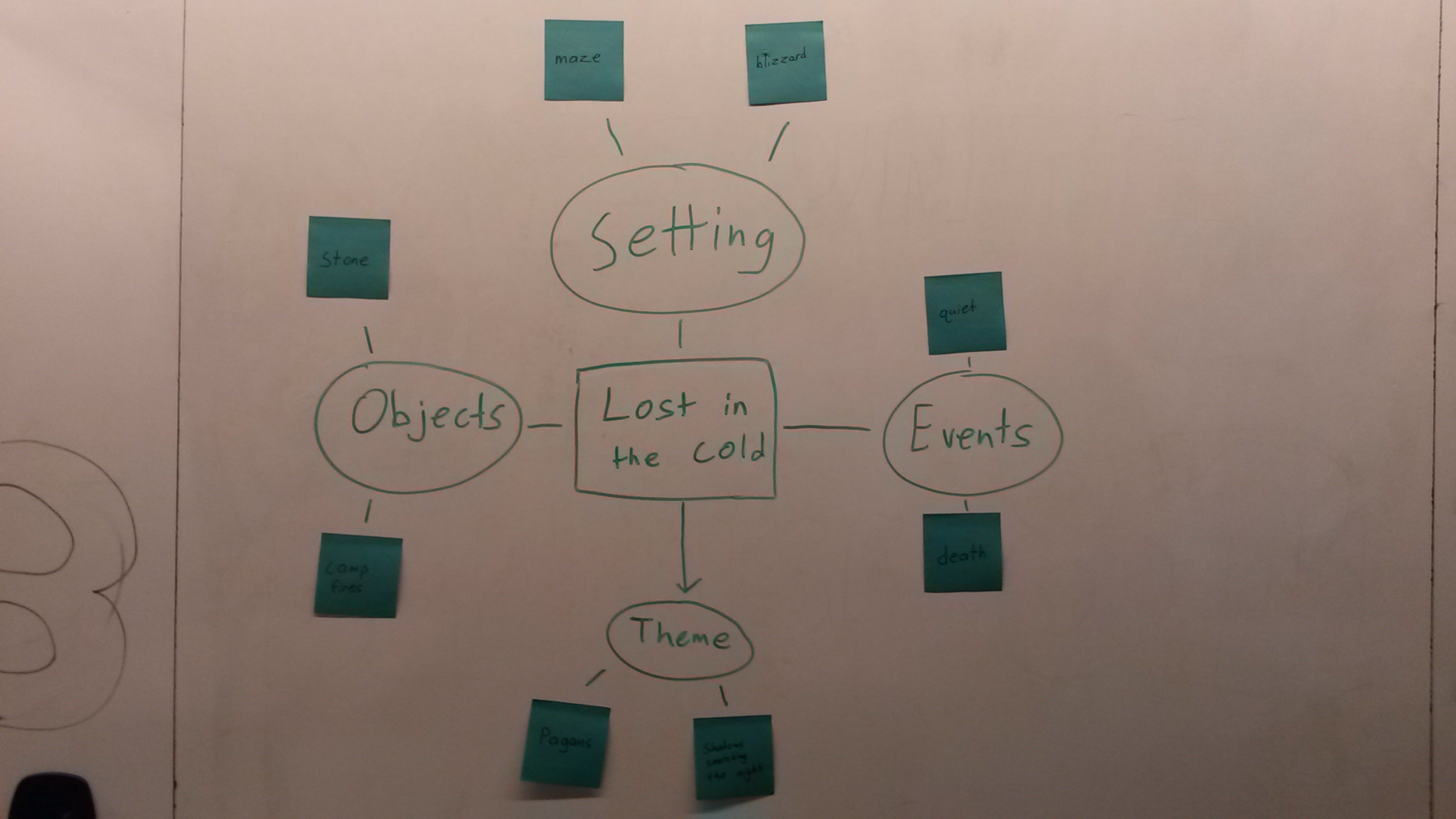

I selected the final words from each iteration of the ABC Game and arranged them into a web.

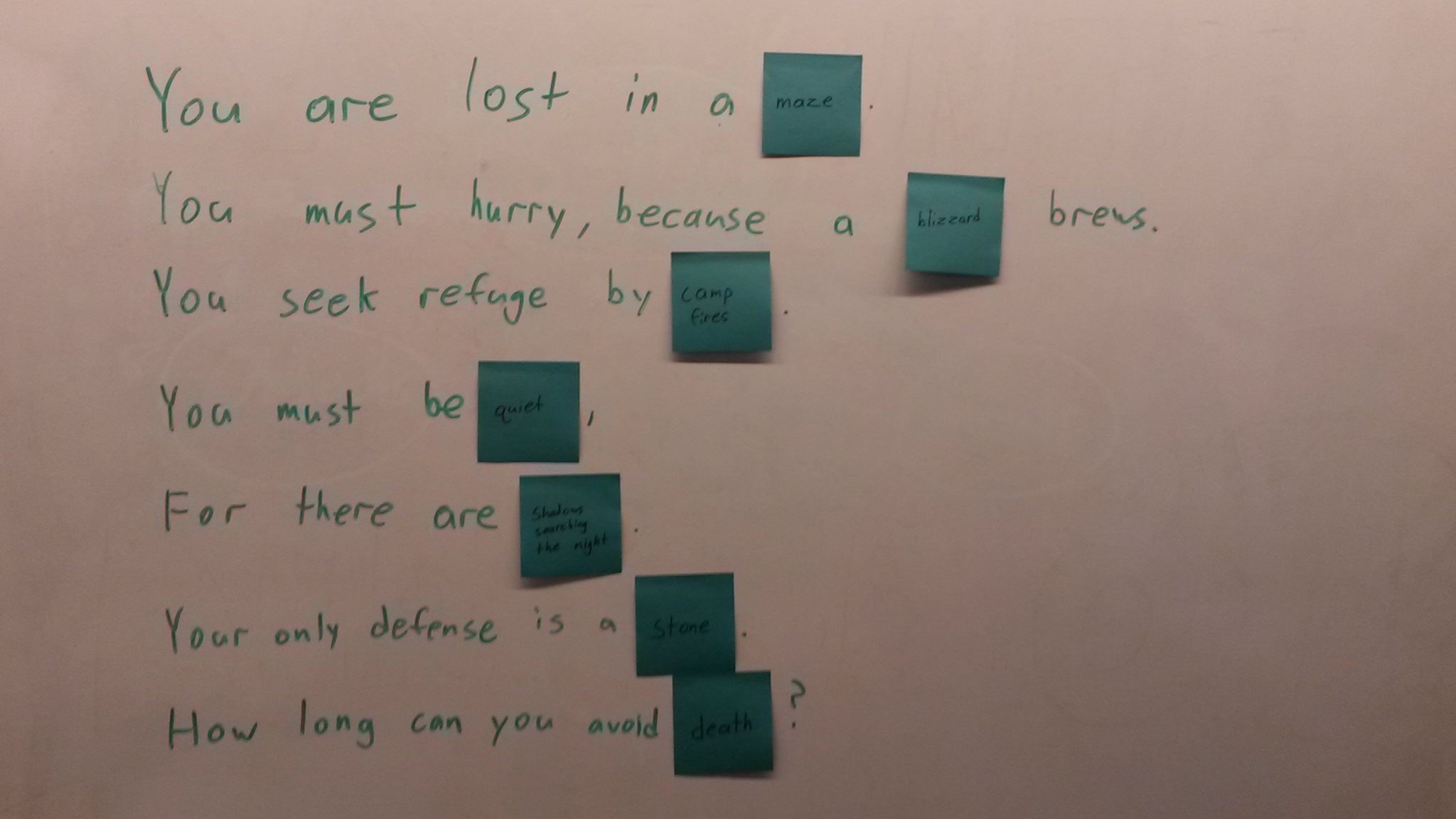

As an added bonus, I created a narrative with the final words.

Week 1, 9/8/2016: Introductions and MOCAP room tutorial

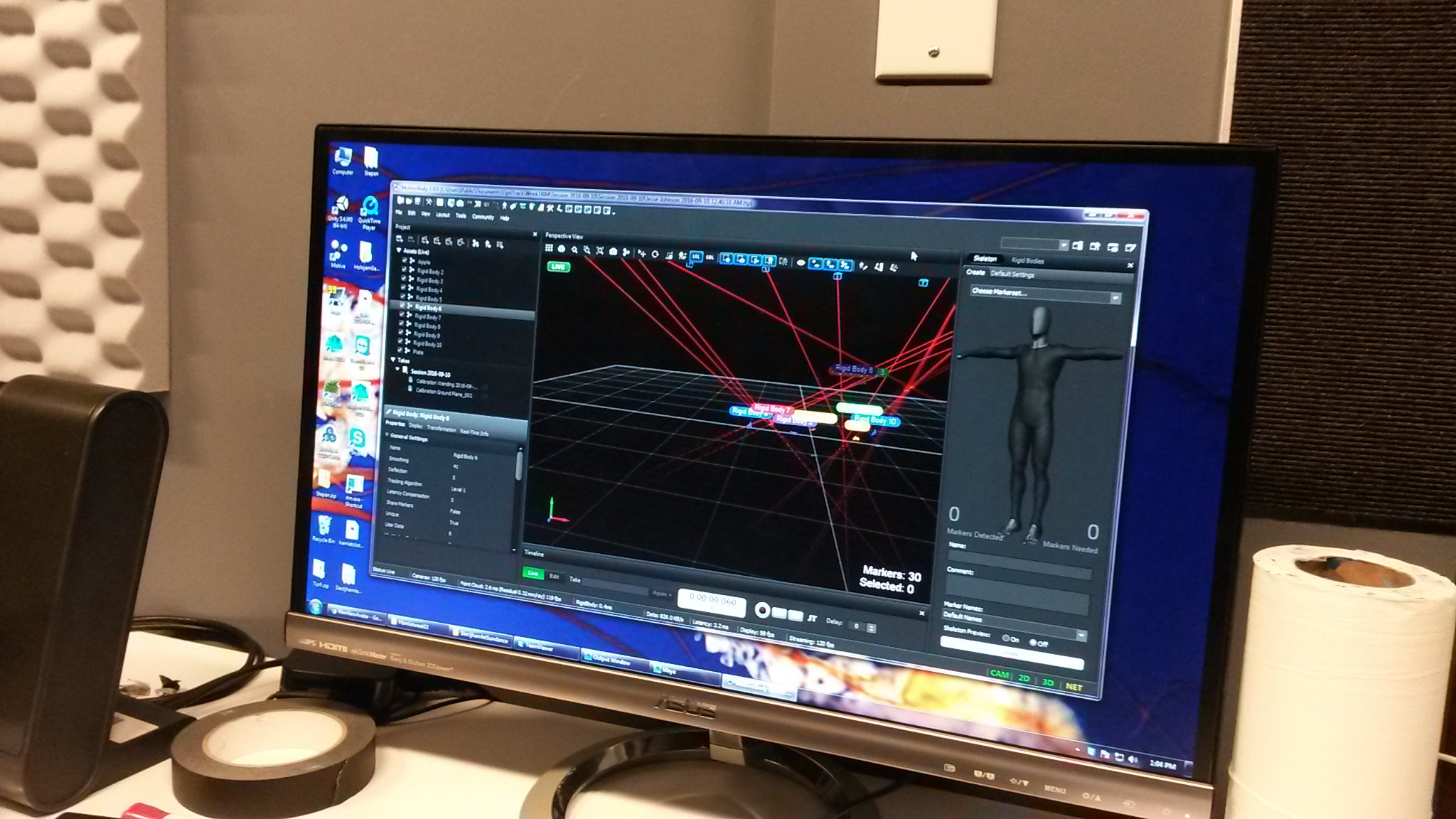

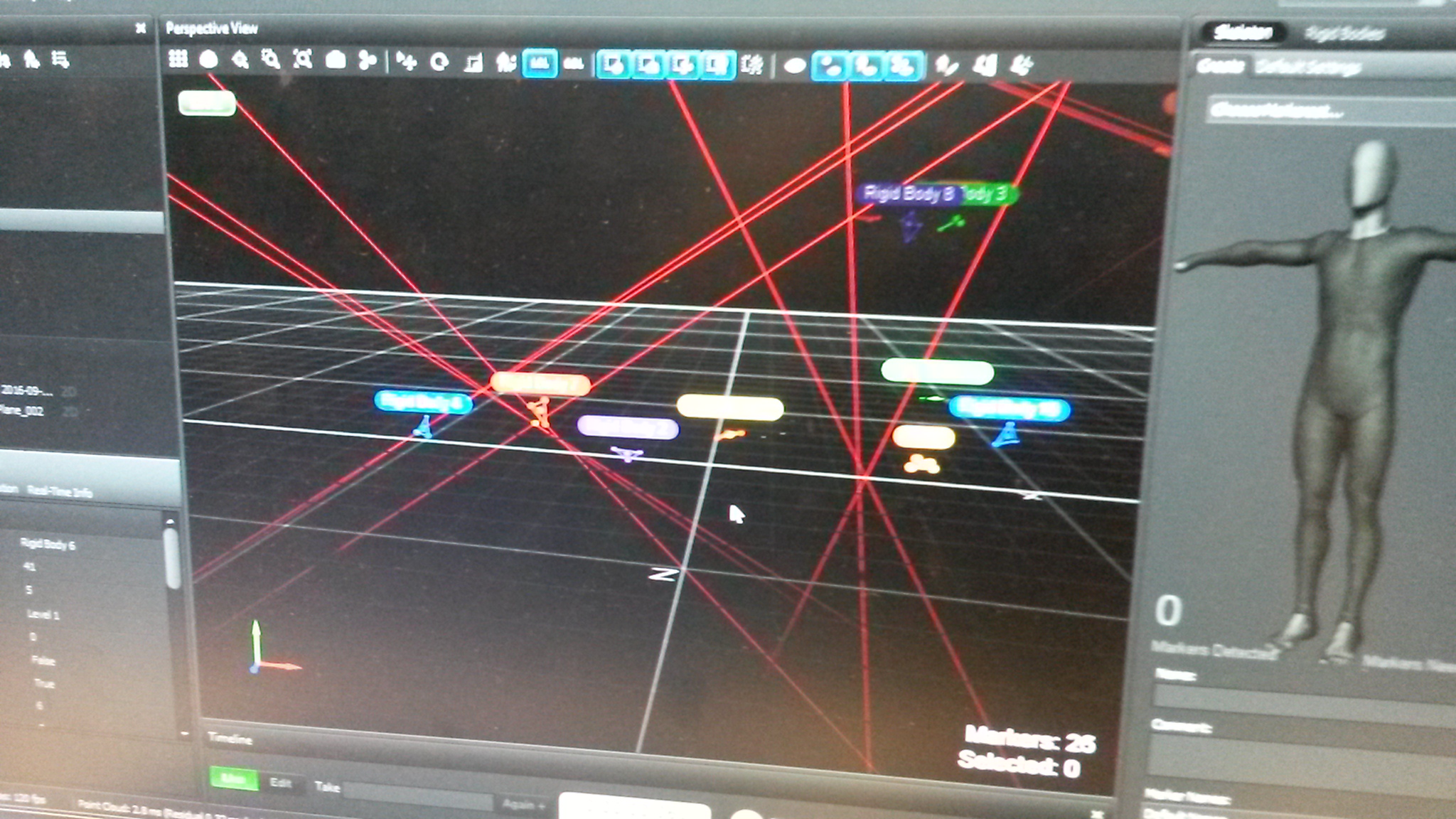

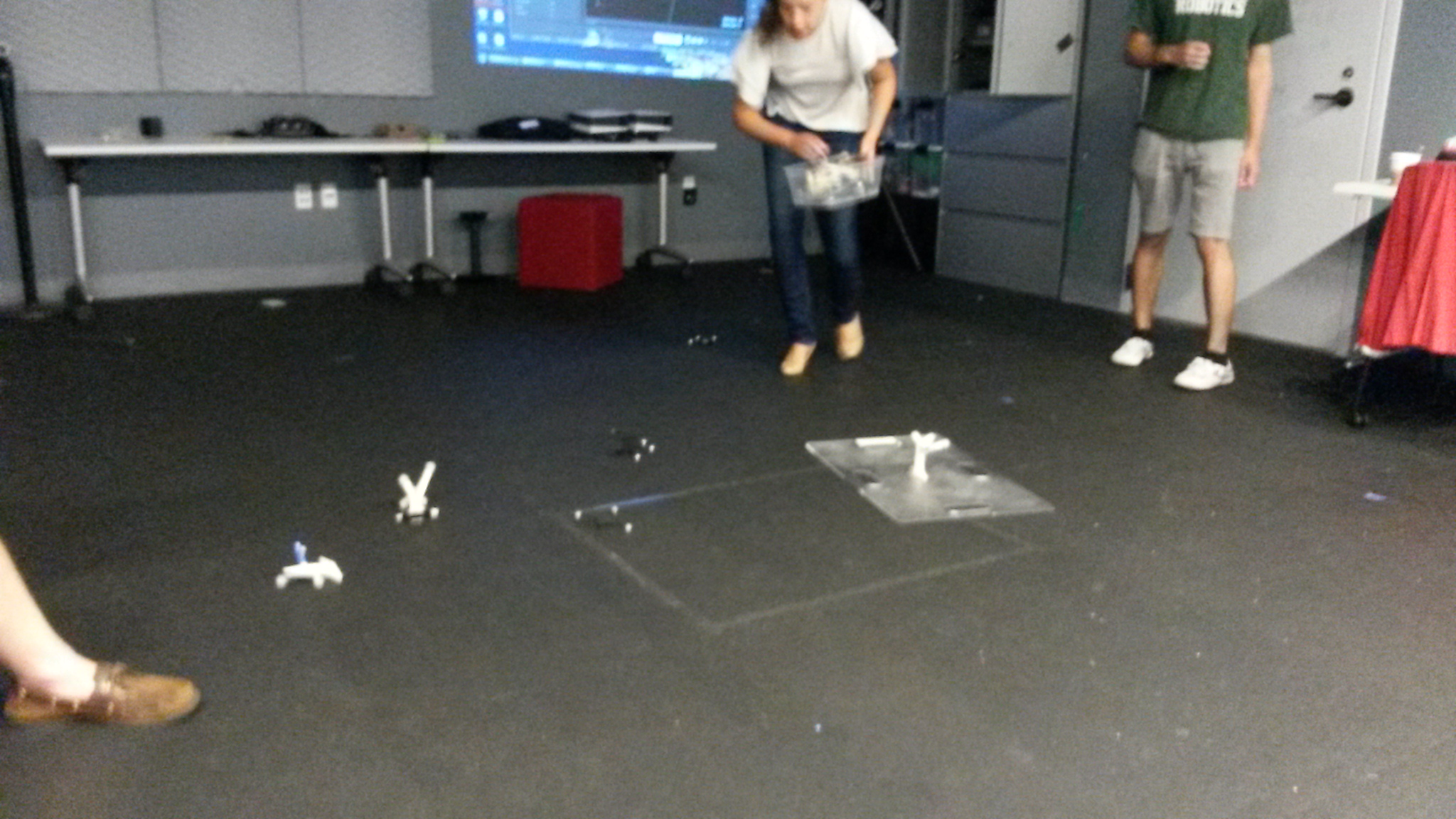

This week we learned how to use the Motive software to calibrate the IR cameras in the MOCAP room. With Motive, I was able to easily orient the cameras, establish a ground plane, and create rigid bodies (a unique arrangement of at least three reflective markers that the cameras use to track a solid object as it moves through the space).
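Why the arrangement has to be unique: the distances between a rigid body's markers don't change as the object moves or rotates, so they work as a fingerprint, and two rigid bodies with the same fingerprint couldn't be told apart. Once the markers are identified, three non-collinear points are enough to pin down a position and orientation. The Python below is an illustrative sketch only. Motive's actual solver is certainly more involved, and the function names, the 2 mm tolerance (units assumed to be meters), and the sample coordinates are hypothetical. `frame` also assumes the markers arrive in a consistent order, which a real tracker has to work out for itself.

```python
import math
from itertools import combinations
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def _sub(a: Point, b: Point) -> Point:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Point, b: Point) -> Point:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(a: Point) -> Point:
    n = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    return (a[0] / n, a[1] / n, a[2] / n)


def signature(markers: Sequence[Point]) -> List[float]:
    """Sorted pairwise distances: unchanged when the object moves or rotates."""
    return sorted(math.dist(p, q) for p, q in combinations(markers, 2))


def matches(observed: Sequence[Point], template: Sequence[Point], tol: float = 0.002) -> bool:
    """True if the observed markers have the template's fingerprint (within tol)."""
    a, b = signature(observed), signature(template)
    return len(a) == len(b) and all(abs(x - y) <= tol for x, y in zip(a, b))


def frame(p0: Point, p1: Point, p2: Point) -> Tuple[Point, Point, Point, Point]:
    """Origin at the markers' centroid plus a right-handed orthonormal x, y, z basis.
    The three markers must not be collinear."""
    origin = tuple((a + b + c) / 3.0 for a, b, c in zip(p0, p1, p2))
    x_axis = _normalize(_sub(p1, p0))
    z_axis = _normalize(_cross(_sub(p1, p0), _sub(p2, p0)))  # normal to the marker plane
    y_axis = _cross(z_axis, x_axis)                           # already unit length
    return origin, x_axis, y_axis, z_axis
```

For example, a template of (0, 0, 0), (0.1, 0, 0), (0, 0.05, 0) still matches after it is rotated 90° about the vertical axis and carried across the room, and `frame` then reports the rotated x axis pointing along +y.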